http://vincent.bernat.im/en/blog/2011-ssl-benchmark.html

Hitch (stud): one-process-per-core model

stunnel: threaded model

nginx: https://www.nginx.com/blog/nginx-ssl/


http://bird1110.blogspot.com/2011/01/using-stunnel-through-proxy.html

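Generally, setting up a local SOCKS proxy only takes a simple ssh -D. As a temporary solution this works well: at work, servers usually sit behind a reverse proxy, and with ssh you can map them to your local machine and debug them from a browser. For long-term use, however, this approach has quite a few drawbacks.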

With ssh -D there is only a single connection between you and the server, which is not ideal for network performance.

-D requires OpenSSH; the dropbear ssh client commonly used on routers does not support -D.

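Automatic reconnection needs autossh, and if the network switches frequently (for example between 3G and Wi-Fi), you also need a helper script to restart autossh.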

It also cannot provide security features such as username/password authentication.

Given all these problems, if you have the means, a better solution is to run a SOCKS proxy on the server yourself and then map it to your local machine with stunnel or ssh -L.

Installing the SOCKS proxy

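The plan is to run a proxy on the server that only accepts local connections, and then map it to the local machine through another service. (Here dante (danted) provides the SOCKS proxy; the server runs Ubuntu.)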

    apt-get install -y dante-server

After installation, enable or add the following configuration in /etc/danted.conf:

    logoutput: syslog
    internal: 127.0.0.1 port = 1080
    external: eth0
    clientmethod: none
    method: none
    user.privileged: proxy
    user.notprivileged: nobody
    user.libwrap: nobody
    extension: bind
    connecttimeout: 30
    iotimeout: 86400

    client pass {
        from: 127.0.0.1/8 to: 127.0.0.1/8
        log: connect error
        method: none
    }

    client block {
        from: 0.0.0.0/0 to: 0.0.0.0/0
        method: none
    }

    pass {
        from: 127.0.0.1/8 to: 0.0.0.0/0
        command: bind connect udpassociate
        log: connect error
        method: none
    }

    pass {
        from: 0.0.0.0/0 to: 127.0.0.1/8
        command: bindreply udpreply
        log: connect error
        method: none
    }

    block {
        from: 0.0.0.0/0 to: 0.0.0.0/0
        log: connect error
    }

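With the configuration above you get a SOCKS proxy that can only be used locally: danted listens on 127.0.0.1:1080, and its client rules only admit connections from 127.0.0.0/8. Then start it with service danted start.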

Server-side stunnel configuration

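stunnel establishes an encrypted link with the client. We also need to authenticate the client, so that only clients holding a certificate can connect. In a terminal, run: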

    apt-get install stunnel

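After installing stunnel, generate a certificate for the server. Since it is only used to encrypt the traffic, the certificate does not need to be elaborate or officially signed.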

    cd /etc/stunnel
    # CApath must live inside the chroot, so /etc/stunnel/certs points into it
    mkdir -p /var/lib/stunnel4/certs
    ln -s /var/lib/stunnel4/certs /etc/stunnel/certs
    openssl req -new -x509 -days 3650 -nodes -config /usr/share/doc/stunnel4/examples/stunnel.cnf -out stunnel.pem -keyout stunnel.pem

Then edit /etc/stunnel/stunnel.conf:

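    chroot = /var/lib/stunnel4/
    setuid = stunnel4
    setgid = stunnel4
    ; pid and CApath paths are resolved inside the chroot
    pid = /stunnel4.pid
    cert = /etc/stunnel/stunnel.pem
    ;key = /etc/stunnel/stunnel.pem
    ; 3 = verify the peer against a locally installed certificate (in CApath)
    verify = 3
    CApath = /certs

    ; performance
    socket = l:TCP_NODELAY=1

    [danted]
    ; accept TLS on port 1081 and forward to danted on localhost:1080
    accept = 1081
    connect = 1080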

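stunnel now accepts TLS connections on port 1081 and forwards them to danted on the local port 1080. Next, edit /etc/default/stunnel4, change ENABLED=0 to ENABLED=1, and start the service with service stunnel4 start.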

Client-side stunnel configuration

With the server side done, configure stunnel on the client so that the port is mapped to the local machine. Again using Ubuntu as the example, run:

    apt-get install stunnel

Then generate a client certificate:

    cd /etc/stunnel
    openssl req -new -x509 -days 3650 -nodes -out client.pem -keyout client.pem

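Note that each certificate should be created with different subject information so that the certificates can be told apart. Then copy the generated client.pem into /etc/stunnel/certs on the server, and run the following on the server: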

    cd /etc/stunnel/certs
    $(/usr/lib/ssl/misc/c_hash client.pem | awk '{print "ln -s " $3 " " $1}')

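c_hash prints the subject-hash file name (of the form xxxxxxxx.0) under which stunnel looks certificates up in CApath; the command above turns that output into a symlink, so stunnel can find the matching certificate.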

Then, on the client, add the configuration file /etc/stunnel/client.conf:

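    chroot = /var/lib/stunnel4/
    setuid = stunnel4
    setgid = stunnel4
    pid = /stunnel4-client.pid
    ; certificate presented to the server for client authentication
    cert = /etc/stunnel/client.pem
    client = yes

    ; performance
    socket = r:TCP_NODELAY=1

    [danted]
    ; local SOCKS endpoint, tunnelled over TLS to the server's port 1081
    accept = 127.0.0.1:1080
    connect = [HOST]:1081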

Here [HOST] is the server's domain name or IP address. Again change ENABLED=0 to ENABLED=1 in /etc/default/stunnel4, then start it with service stunnel4 start; the client now has a SOCKS proxy on port 1080.
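Applications can then use the tunnel like any local SOCKS proxy. Below is a minimal Java sketch that assumes the client.conf above, with stunnel accepting on 127.0.0.1:1080. It uses only the standard java.net.Proxy and Socket classes; the class name LocalSocksProxy is hypothetical.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Proxy;
import java.net.Socket;

// Sketch: route a plain Java TCP connection through the local stunnel endpoint
// (accept = 127.0.0.1:1080 in client.conf), which forwards to danted on the server.
class LocalSocksProxy {
    static final String PROXY_HOST = "127.0.0.1"; // stunnel "accept" address (assumed)
    static final int PROXY_PORT = 1080;           // stunnel "accept" port (assumed)

    static Proxy socksProxy() {
        return new Proxy(Proxy.Type.SOCKS, new InetSocketAddress(PROXY_HOST, PROXY_PORT));
    }

    // Connects to host:port through the proxy. The target is left unresolved,
    // so Java's SOCKS client passes the host name on to the proxy.
    static Socket connectVia(Proxy proxy, String host, int port) throws IOException {
        Socket socket = new Socket(proxy);
        try {
            socket.connect(InetSocketAddress.createUnresolved(host, port), 10_000);
            return socket;
        } catch (IOException e) {
            socket.close();
            throw e;
        }
    }

    public static void main(String[] args) throws IOException {
        Proxy proxy = socksProxy();
        System.out.println("Proxy: " + proxy);
        if (args.length == 2) { // e.g. example.com 80; needs the tunnel to be running
            try (Socket s = connectVia(proxy, args[0], Integer.parseInt(args[1]))) {
                System.out.println("Connected: " + s.isConnected());
            }
        }
    }
}
```

Any program that accepts a SOCKS proxy setting can point at the same address instead.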

Final notes

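Because the server requires client-certificate authentication, only users whose certificates have been added can connect to it. The same method can map other services too, such as polipo.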

For devices with poor stunnel support, you can also forward this port 1080 with ssh -L instead of using -D.

References

http://www.bock.nu/blog/secure-firewall-bypass-danted-stunnel

Time sync over HTTP

INSTALL

    wget http://www.vervest.org/htp/archive/c/htpdate-1.1.3.tar.gz
    tar xvfz htpdate-1.1.3.tar.gz
    cd htpdate-1.1.3
    make

SYNC EXAMPLE

    ./htpdate -P PROXY_IP:PROXY_PORT -s www.linux.org www.freebsd.org
    sudo ./htpdate -P PROXY_IP:PROXY_PORT -s www.linux.org www.freebsd.org
    sudo ./htpdate -P PROXY_IP:PROXY_PORT -s http://www.ntsc.ac.cn/ http://mrtg.synet.edu.cn/ http://www.baidu.com http://www.taobhao.com
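htpdate works from the Date header that web servers include in their HTTP responses. That header is an RFC 1123 timestamp with one-second resolution. Below is a minimal Java sketch of the core idea: compare the header with the local clock, then combine several servers. Taking the median is an assumption made here, and htpdate's own way of combining servers and compensating for latency may differ. The class name is hypothetical, and the sketch does not support a proxy.

```java
import java.io.IOException;
import java.net.HttpURLConnection;
import java.net.URI;
import java.time.Duration;
import java.time.Instant;
import java.time.ZonedDateTime;
import java.time.format.DateTimeFormatter;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Sketch of the idea behind htpdate: estimate the local clock offset from
// the HTTP "Date" response header of one or more web servers.
class HttpDateOffset {

    // Positive result: the server clock is ahead of the local clock.
    static Duration offset(String dateHeader, Instant localReceiveTime) {
        Instant server = ZonedDateTime.parse(dateHeader, DateTimeFormatter.RFC_1123_DATE_TIME).toInstant();
        return Duration.between(localReceiveTime, server);
    }

    // Middle value of several offsets, so one server with a bad clock
    // cannot drag the estimate far off.
    static Duration medianOffset(List<Duration> offsets) {
        List<Duration> sorted = new ArrayList<>(offsets);
        Collections.sort(sorted);
        return sorted.get(sorted.size() / 2);
    }

    // Sends a HEAD request and reads the Date header.
    static Duration probe(String url) throws IOException {
        HttpURLConnection conn = (HttpURLConnection) URI.create(url).toURL().openConnection();
        conn.setRequestMethod("HEAD");
        try {
            conn.getResponseCode();
            String date = conn.getHeaderField("Date");
            if (date == null) throw new IOException("no Date header from " + url);
            return offset(date, Instant.now());
        } finally {
            conn.disconnect();
        }
    }

    public static void main(String[] args) throws IOException {
        List<Duration> offsets = new ArrayList<>();
        for (String url : args) offsets.add(probe(url)); // e.g. http://www.linux.org/
        if (!offsets.isEmpty()) System.out.println("Estimated offset: " + medianOffset(offsets));
    }
}
```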

http://perceptionistruth.com/2013/05/running-your-own-dynamic-dns-service-on-debian/

I used to have a static IP address on my home ADSL connection, but then I moved to BT Infinity, and they don’t provide that ability. For whatever reason, my Infinity connection resets a few times a week, and it always results in a new IP address.

Since I wanted to be able to connect to a service on my home IP address, I signed up to dyn.com and used their free service for a while, using a CNAME with my hosting provider (Gandi) so that I could use a single common host, in my own domain, and point it to the dynamic IP host and hence, dynamic IP address.

While this works fine, I’ve had a few e-mails from dyn.com saying either that the update process hadn’t been enough to prevent the ‘30-day account closure’ process or, more recently, that they’re changing things so that you now need to log in to the website once every 30 days to keep your account.

I finally decided that since I run a couple of VPSs, and have good control over DNS via Gandi, I may as well run my own bind9 service and use the dynamic update feature to handle my own dynamic DNS needs. Side note: I think Gandi do support DNS changes through their API, but I couldn’t get it working. Also, I wanted something agnostic of my hosting provider in case I ever move DNS in future (I’m not planning to, since I like Gandi very much).

The basic elements of this are,

In the interests of not re-inventing the wheel, I copied most of the activity from this post. But I’ll summarise it here in case that ever goes away.

You’ll need somewhere to run a DNS (bind9 in my case) service. This can’t be on the machine with the dynamic IP address for obvious reasons. If you already have a DNS service somewhere, you can use that, but for me, I installed it on one of my Debian VPS machines. This is of course trivial with Debian (I don’t use sudo, so you’ll need to be running as root to execute these commands),

    apt-get install bind9 bind9-doc

If the machine you’ve installed bind9 onto has a firewall, don’t forget to open port 53 (both TCP and UDP). You now need to choose and configure your subdomain. You’ll be creating a single zone, and allowing dynamic updates.

The default config for bind9 on Debian is in /etc/bind, and that includes zone files. However, dynamically updated zones need a journal file, and need to be modified by bind. I didn’t even bother trying to put the file into /etc/bind, on the assumption bind won’t have write access, so instead, for dynamic zones, I decided to create them in /var/lib/bind. I avoided /var/cache/bind because the cache directory, in theory, is for transient files that applications can recreate. Since bind can’t recreate the zone file entirely, it’s not appropriate to store it there.

I added this section to /etc/bind/named.conf.local,

    // Dynamic zone
    zone "home.example.com" {
        type master;
        file "/var/lib/bind/home.example.com";
        update-policy {
            // allow hosts to update themselves with a key having their own name
            grant *.home.example.com self home.example.com.;
        };
    };

This sets up the basic entry for the master zone on this DNS server.

So I’ll be honest, I’m following this section mostly by rote from the article I linked. I’m pretty sure I understand it, but just so you know. There are a few ways of trusting dynamic updates, but since you’ll likely be making them from a host with a changing IP address, the best way is to use a shared secret. That secret is then held on the server and used by the client to identify itself. The configuration above allows hosts in the subdomain to update their own entry, if they have a key (shared secret) that matches the one on the server. This stage creates those keys.

This command creates two files. One will be the server copy of the key file, and can contain multiple keys, the other will be a single file named after the host that we’re going to be updating, and needs to be moved to the host itself, for later use.

    ddns-confgen -r /dev/urandom -q -a hmac-md5 -k thehost.home.example.com -s thehost.home.example.com. | tee -a /etc/bind/home.example.com.keys > /etc/bind/key.thehost.home.example.com

The files will both have the same content, and will look something like this,

    key "thehost.home.example.com" {
        algorithm hmac-md5;
        secret "somesetofrandomcharacters";
    };
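The secret in that file is just random bytes, base64-encoded. nsupdate and bind each compute an HMAC with it to prove that an update comes from the key holder. Below is a rough Java sketch of what ddns-confgen produces: it writes a key clause in the same shape as the one above and computes an HMAC-MD5 with the standard javax.crypto API. Two assumptions apply. The 16-byte secret size is chosen for this sketch. Real TSIG signs the DNS message plus TSIG fields, not arbitrary bytes. The class name is hypothetical.

```java
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.security.SecureRandom;
import java.util.Base64;
import javax.crypto.Mac;
import javax.crypto.spec.SecretKeySpec;

// Sketch of a BIND-style TSIG key: random bytes, base64-encoded in a key clause.
class TsigKeySketch {

    static byte[] randomSecret(int numBytes) {
        byte[] secret = new byte[numBytes];
        new SecureRandom().nextBytes(secret);
        return secret;
    }

    // Formats a key clause shaped like the file ddns-confgen writes.
    static String keyClause(String keyName, byte[] secret) {
        return "key \"" + keyName + "\" {\n"
                + "\talgorithm hmac-md5;\n"
                + "\tsecret \"" + Base64.getEncoder().encodeToString(secret) + "\";\n"
                + "};\n";
    }

    // Simplified: real TSIG signs the DNS message plus TSIG fields, not raw bytes.
    static byte[] hmacMd5(byte[] secret, byte[] data) {
        try {
            Mac mac = Mac.getInstance("HmacMD5");
            mac.init(new SecretKeySpec(secret, "HmacMD5"));
            return mac.doFinal(data);
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException(e);
        }
    }

    static String hex(byte[] bytes) {
        StringBuilder sb = new StringBuilder();
        for (byte b : bytes) sb.append(String.format("%02x", b));
        return sb.toString();
    }

    public static void main(String[] args) {
        byte[] secret = randomSecret(16); // 128-bit secret (an assumption for this sketch)
        System.out.print(keyClause("thehost.home.example.com", secret));
        byte[] data = "update".getBytes(StandardCharsets.US_ASCII);
        System.out.println("HMAC-MD5: " + hex(hmacMd5(secret, data)));
    }
}
```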

You should move the file key.thehost.home.example.com to the host which is going to be doing the updating. You should also change the permissions on the home.example.com.keys file,

    chown root:bind /etc/bind/home.example.com.keys
    chmod u=rw,g=r,o= /etc/bind/home.example.com.keys

You should now return to /etc/bind/named.conf.local and add this section (to use the new key you have created),

    // DDNS keys
    include "/etc/bind/home.example.com.keys";

With all that done, you’re ready to create the empty zone.

The content of the zone file will vary, depending on what exactly you’re trying to achieve. But this is the one I’m using. This is created in /var/lib/bind/home.example.com,

    $ORIGIN .
    $TTL 300 ; 5 minutes
    home.example.com IN SOA nameserver.example.com. root.example.com. (
                        1          ; serial
                        3600       ; refresh (1 hour)
                        600        ; retry (10 minutes)
                        604800     ; expire (1 week)
                        300        ; minimum (5 minutes)
                        )
                    NS  nameserver.example.com.
    $ORIGIN home.example.com.

In this case, nameserver.example.com is the hostname of the server you’ve installed bind9 onto. Unless you’re very careful, you shouldn’t add any static entries to this zone, because it’s always possible they’ll get overwritten, although of course, there’s no technical reason to prevent it.

At this stage, you can recycle the bind9 instance (/etc/init.d/bind9 reload), and resolve any issues (I had plenty, thanks to terrible typos and a bad memory).

You can now test your nameserver to make sure it responds to queries about the home.example.com domain. In order to properly integrate it though, you’ll need to delegate the zone to it, from the nameserver which handles example.com. With Gandi, this was as simple as adding the necessary NS entry to the top level zone. Obviously, I only have a single DNS server handling this dynamic zone, and that’s a risk, so you’ll need to set up some secondaries, but that’s outside the scope of this post. Once you’ve done the delegation, you can try doing lookups from anywhere on the Internet, to ensure you can get (for example) the SOA for home.example.com.

You’re now able to update the target nameserver, from your source host using the nsupdate command. By telling it where your key is (-k filename), and then passing it commands you can make changes to the zone. I’m using exactly the same format presented in the original article I linked above.

    cat <<EOF | nsupdate -k /path/to/key.thehost.home.example.com
    server nameserver.example.com
    zone home.example.com.
    update delete thehost.home.example.com.
    update add thehost.home.example.com. 60 A 192.168.0.1
    update add thehost.home.example.com. 60 TXT "Updated on $(date)"
    send
    EOF

Obviously, you can change the TTLs to something other than 60 if you prefer.
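If you would rather drive updates from a program than from a heredoc, the batch is plain text. Below is a minimal Java sketch that builds the same nsupdate commands as the shell example. It can optionally pipe them into nsupdate -k <keyfile> with ProcessBuilder, assuming nsupdate is on the PATH. The class and method names are hypothetical, and the host, zone and address values are the article's examples.

```java
import java.io.IOException;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;

// Builds the nsupdate batch used in the shell example and can pipe it to nsupdate.
class NsUpdateBatch {

    static String build(String server, String zone, String fqdn, String ipv4, int ttl, String note) {
        StringBuilder sb = new StringBuilder();
        sb.append("server ").append(server).append('\n');
        sb.append("zone ").append(zone).append('\n');
        sb.append("update delete ").append(fqdn).append('\n'); // remove all old records for the name
        sb.append("update add ").append(fqdn).append(' ').append(ttl).append(" A ").append(ipv4).append('\n');
        sb.append("update add ").append(fqdn).append(' ').append(ttl)
          .append(" TXT \"").append(note).append("\"\n");
        sb.append("send\n"); // submit the whole batch
        return sb.toString();
    }

    // Runs "nsupdate -k keyFile" and writes the batch to its standard input.
    static int runNsupdate(String keyFile, String batch) throws IOException, InterruptedException {
        Process p = new ProcessBuilder("nsupdate", "-k", keyFile)
                .redirectOutput(ProcessBuilder.Redirect.INHERIT)
                .redirectError(ProcessBuilder.Redirect.INHERIT)
                .start();
        try (OutputStream stdin = p.getOutputStream()) {
            stdin.write(batch.getBytes(StandardCharsets.UTF_8));
        }
        return p.waitFor();
    }

    public static void main(String[] args) throws Exception {
        String batch = build("nameserver.example.com", "home.example.com.",
                "thehost.home.example.com.", "192.168.0.1", 60,
                "Updated on " + java.time.Instant.now());
        System.out.print(batch);
        if (args.length == 1) System.exit(runNsupdate(args[0], batch)); // args[0]: key file path
    }
}
```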

The last stage is automating updates, so that when your local IP address changes, you can update the relevant DNS server. There are myriad ways of doing this. I’ve opted for a simple shell script which I’ll run every couple of minutes via cron, and have it check and update DNS if required. In my case, my public IP address is behind a NAT router, so I can’t just look at a local interface, and so I’m using dig to get my IP address from the OpenDNS service.

This is my first stab at the script, and it’s absolutely a work in progress (it’s too noisy at the moment for example),

    #!/bin/sh

    # set some variables
    host=thehost
    zone=home.example.com
    dnsserver=nameserver.example.com
    keyfile=/home/bob/conf/key.$host.$zone
    #
    # get current external address
    ext_ip=`dig +short @resolver1.opendns.com myip.opendns.com`
    # get last ip address from the DNS server
    last_ip=`dig +short @$dnsserver $host.$zone`

    if [ ! -z "$ext_ip" ]; then
        if [ ! -z "$last_ip" ]; then
            if [ "$ext_ip" != "$last_ip" ]; then
                echo "IP addresses do not match (external=$ext_ip, last=$last_ip), sending an update"
                cat <
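The script's decision logic is easy to get subtly wrong, especially the empty-result cases. Below is a small Java sketch of the same checks, useful for reasoning about or testing them. The listing stops before the branch that handles a missing DNS record, so treating that case as "send an update" is an assumption of this sketch. The class and enum names are hypothetical.

```java
// Mirrors the checks in the shell script: do nothing if the external lookup
// failed, otherwise update when the published record differs from it.
class DdnsDecision {
    enum Action { SKIP_NO_EXTERNAL_IP, NO_CHANGE, UPDATE }

    // Same intent as the script's [ -z "$var" ] tests.
    static boolean isBlank(String s) {
        return s == null || s.trim().isEmpty();
    }

    static Action decide(String extIp, String lastIp) {
        if (isBlank(extIp)) return Action.SKIP_NO_EXTERNAL_IP; // dig to OpenDNS failed
        // Assumption: a missing record needs an update (the original branch is cut off).
        if (isBlank(lastIp)) return Action.UPDATE;
        return extIp.trim().equals(lastIp.trim()) ? Action.NO_CHANGE : Action.UPDATE;
    }

    public static void main(String[] args) {
        System.out.println(decide("203.0.113.5", "198.51.100.7"));
    }
}
```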

http://www.foell.org/justin/diy-dynamic-dns-with-openwrt-bind/

http://blog.infertux.com/2012/11/25/your-own-dynamic-dns/

http://idefix.net/~koos/dyndnshowto/dyndnshowto.html

https://blog.hqcodeshop.fi/archives/76-Doing-secure-dynamic-DNS-updates-with-BIND.html

https://0x2c.org/rfc2136-ddns-bind-dnssec-for-home-router-dynamic-dns/

http://agiletesting.blogspot.com/2014/12/dynamic-dns-updates-with-nsupdate-new.html

http://www.codeceo.com/article/android-include-viewstub.html

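When building complex layouts, you sometimes find that you have added a lot of ViewGroups and Views. The resulting problem is that the View tree gets deeper and deeper and the app gets slower and slower, because UI rendering is expensive.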

This is when you should optimize your layouts. Two techniques are introduced here: the <include> tag and the ViewStub class.

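<include/> exists to avoid duplicated code. Imagine that we need to add a footer to every view in the app, and that this footer is a TextView showing the app's name. Several Activities usually correspond to several XML layout files; do we really copy this TextView into every one of them? If the TextView ever needs to change, every XML layout file has to be edited, which is a nightmare.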

One of the ideas of object-oriented programming is code reuse, so how do we reuse layouts? This is where <include/> comes in.

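If you have studied C, #include will be familiar: it is a preprocessor directive that copies the contents of the included file to the location of the #include before the program is compiled into a binary.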

Android's <include/> can be understood the same way: it copies some shared XML into the place where <include/> appears. Take an Activity as an example.

    <RelativeLayout xmlns:android="http://schemas.android.com/apk/res/android"
        android:layout_width="fill_parent"
        android:layout_height="fill_parent" >

        <TextView
            android:layout_width="fill_parent"
            android:layout_height="wrap_content"
            android:layout_centerInParent="true"
            android:gravity="center_horizontal"
            android:text="@string/hello" />

        <include layout="@layout/footer_with_layout_properties"/>

    </RelativeLayout>

footer_with_layout_properties.xml contains just a simple TextView:

    <TextView xmlns:android="http://schemas.android.com/apk/res/android"
        android:layout_width="fill_parent"
        android:layout_height="wrap_content"
        android:layout_alignParentBottom="true"
        android:layout_marginBottom="30dp"
        android:gravity="center_horizontal"
        android:text="@string/footer_text" />

In the code above, the <include/> tag achieves code reuse.

However, some questions remain.

footer_with_layout_properties.xml uses the android:layout_alignParentBottom attribute, which only works because the outer layout is a RelativeLayout.

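So what happens if the outer layout is changed to a LinearLayout? The answer is obvious: it will not work. What can we do? We can put the specific attributes in the <include/> tag itself, as in the code below.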

    <RelativeLayout xmlns:android="http://schemas.android.com/apk/res/android"
        android:layout_width="fill_parent"
        android:layout_height="fill_parent">

        <TextView
            android:layout_width="fill_parent"
            android:layout_height="wrap_content"
            android:layout_centerInParent="true"
            android:gravity="center_horizontal"
            android:text="@string/hello"/>

        <include
            layout="@layout/footer"
            android:layout_width="fill_parent"
            android:layout_height="wrap_content"
            android:layout_alignParentBottom="true"
            android:layout_marginBottom="30dp"/>

    </RelativeLayout>

Here we used android:layout_* attributes directly in the <include/> tag.

Note: to override any android:layout_* attribute specified by the included layout from the <include/> tag, you must specify both layout_width and layout_height in the <include/> tag. This may well be a bug in Android.

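During development you will inevitably run into interaction problems, such as showing or hiding a view. If you want a view to be displayed only when it is needed, try the ViewStub class.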

First, here is the official description of ViewStub:

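"A ViewStub is an invisible, zero-sized View that can be used to lazily inflate layout resources at runtime. When a ViewStub is made visible, or when inflate() is invoked, the layout resource is inflated. The ViewStub then replaces itself in its parent with the inflated View or Views."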

Now that it is clear what ViewStub does, let's look at an example: a layout contains a MapView that should only be shown when it is needed.

    <RelativeLayout xmlns:android="http://schemas.android.com/apk/res/android"
        android:layout_width="fill_parent"
        android:layout_height="fill_parent" >

        <Button
            android:layout_width="fill_parent"
            android:layout_height="wrap_content"
            android:layout_gravity="center_vertical"
            android:onClick="onShowMap"
            android:text="@string/show_map" />

        <ViewStub
            android:id="@+id/map_stub"
            android:layout_width="fill_parent"
            android:layout_height="fill_parent"
            android:inflatedId="@+id/map_view"
            android:layout="@layout/map" />

    </RelativeLayout>

map.xml contains a MapView, which is only shown when necessary:

    <com.google.android.maps.MapView xmlns:android="http://schemas.android.com/apk/res/android"
        android:id="@+id/map_view"
        android:layout_width="fill_parent"
        android:layout_height="fill_parent"
        android:apiKey="my_api_key"
        android:clickable="true" />

In addition, inflatedId sets the id of the View that is inflated when the ViewStub is made visible or inflate() is called; in this example, it is the MapView's id.

Next, let's see how the ViewStub is used.

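    import android.os.Bundle;
    import android.view.View;

    import com.google.android.maps.MapActivity;

    public class MainActivity extends MapActivity {

        private View mViewStub;

        @Override
        public void onCreate(Bundle savedInstanceState) {
            super.onCreate(savedInstanceState);
            setContentView(R.layout.main);
            mViewStub = findViewById(R.id.map_stub);
        }

        // Called through android:onClick="onShowMap"; making the stub visible
        // inflates @layout/map in its place.
        public void onShowMap(View v) {
            mViewStub.setVisibility(View.VISIBLE);
        }

        @Override
        protected boolean isRouteDisplayed() {
            return false;
        }
    }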

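Some readers will surely ask: what is the difference between using a ViewStub and simply setting a View to View.GONE or View.VISIBLE? Isn't it all just showing and hiding? A ViewStub even seems more cumbersome.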

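There is indeed a difference, involving View-tree rendering, memory consumption and so on. A View set to GONE is still inflated when the layout is loaded and stays in the View tree. A ViewStub, by contrast, is a lightweight placeholder, and the layout it references is only inflated when the stub is made visible or inflate() is called.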

For further details, please look it up yourself. As the saying goes, self-reliance brings plenty!
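The GONE-versus-ViewStub difference is the classic eager-versus-lazy trade-off. Below is a plain-Java analogy that uses no Android API: LazyStub is a hypothetical class, and a Supplier stands in for layout inflation. Like a real ViewStub, it allows inflate() only once, because a real stub has already left the view hierarchy after inflating. Its show() method mimics setVisibility(View.VISIBLE), inflating on first use and reusing the result afterwards.

```java
import java.util.function.Supplier;

// Plain-Java analogy (not the Android API): the expensive object is built only
// when first needed, as a ViewStub inflates its layout only when it is made
// visible or inflate() is called. A view marked GONE is built up front instead.
final class LazyStub<T> {
    private final Supplier<T> inflater;
    private T inflated; // stays null until the first inflate()

    LazyStub(Supplier<T> inflater) {
        this.inflater = inflater;
    }

    // Like ViewStub.inflate(): allowed once only.
    T inflate() {
        if (inflated != null) throw new IllegalStateException("already inflated");
        inflated = inflater.get();
        return inflated;
    }

    // Like setVisibility(View.VISIBLE): inflates on first use, reuses afterwards.
    T show() {
        return inflated != null ? inflated : inflate();
    }

    boolean isInflated() {
        return inflated != null;
    }

    public static void main(String[] args) {
        LazyStub<StringBuilder> map = new LazyStub<>(() -> new StringBuilder("expensive map view"));
        System.out.println("inflated before click: " + map.isInflated());
        map.show(); // e.g. from an onShowMap() handler
        System.out.println("inflated after click: " + map.isInflated());
    }
}
```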

http://code.google.com/p/android/issues/detail?id=2863

http://android-developers.blogspot.com.ar/2009/03/android-layout-tricks-3-optimize-with.html

http://developer.android.com/reference/android/view/ViewStub.html

http://cyrilmottier.com/2014/08/26/putting-your-apks-on-diet/

It’s no secret: APKs out there are getting bigger and bigger. While simple, single-task apps weighed 2MB in the days of the first versions of Android, it is now very common to download 10 to 20MB apps. This explosion in APK file size is a direct consequence of both users’ expectations and developers’ growing experience. Several reasons explain this dramatic file size increase:

Publishing lightweight applications on the Play Store is a good practice every developer should focus on when designing an application. Why? First, because it is synonymous with a simple, maintainable and future-proof code base. Second, because developers generally prefer staying below the Play Store’s current 50MB APK limit rather than dealing with APK expansion files. Finally, because we live in a world of constraints: limited bandwidth, limited disk space, etc. The smaller the APK, the faster the download, the faster the installation, the less the frustration and, most importantly, the better the ratings.

In many (not to say all) cases, the size growth is mandatory in order to fulfill customer requirements and expectations. However, I am convinced that the weight of an APK generally grows at a faster pace than users’ expectations. As a matter of fact, I believe most apps on the Play Store weigh twice or more what they could and should. In this article, I would like to discuss some techniques and rules you can use to reduce the file size of your APKs, making both your co-workers and your users happy.

Before looking at some cool ways to reduce the size of our apps, it is essential to first understand the actual APK file format. Put simply, an APK is an archive file containing several files in compressed form. As a developer, you can easily look at the content of an APK just by unzipping it with the unzip command (unzip <your_apk_name>.apk).[1]


Most of the directories and files in an unzipped APK should look familiar to developers. They mostly reflect the project structure observed during design and development: /assets, /lib, /res, AndroidManifest.xml. Others are quite exotic at first sight. In practice, classes.dex contains the dex-compiled version of your Java code, while resources.arsc contains precompiled resources, e.g. binary XML (values, XML drawables, etc.).

Because an APK is a simple archive file, it means it has two different sizes: the compressed file size and the uncompressed one. While both sizes are important, I will mainly focus on the compressed size in this article. In fact, a great rule of thumb is to consider the size of the uncompressed version to be proportional to the archive: the smaller the APK, the smaller the uncompressed version.
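Because an APK is a ZIP archive, the standard java.util.zip API can report both sizes for every entry. Below is a minimal sketch that sums them over an APK. The class name is hypothetical, and the path comes from the command line.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.util.Enumeration;
import java.util.zip.ZipEntry;
import java.util.zip.ZipFile;

// An APK is a ZIP archive: each entry records its compressed size (what it
// occupies inside the APK) and its uncompressed size (what it expands to).
class ApkSizes {

    // Returns {compressed, uncompressed} totals over all entries.
    static long[] totals(String apkPath) {
        long compressed = 0, uncompressed = 0;
        try (ZipFile zip = new ZipFile(apkPath)) {
            Enumeration<? extends ZipEntry> entries = zip.entries();
            while (entries.hasMoreElements()) {
                ZipEntry e = entries.nextElement();
                // Sizes come from the central directory; -1 would mean "unknown".
                compressed += Math.max(0, e.getCompressedSize());
                uncompressed += Math.max(0, e.getSize());
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return new long[] {compressed, uncompressed};
    }

    public static void main(String[] args) {
        long[] t = totals(args[0]); // e.g. app-release.apk
        System.out.printf("compressed %d bytes, uncompressed %d bytes%n", t[0], t[1]);
    }
}
```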

Reducing the file size of an APK can be done with several techniques. Because each app is different, there is no absolute rule to put an APK on diet. Nevertheless, an APK consists of 3 significant components we can easily act on:

The tips and tricks below all consist of minimizing the space used by each component, reducing the overall APK size in the process.

It probably seems obvious, but good coding hygiene is the first step to reducing the size of your APKs. Know your code like the back of your hand. Get rid of all unused dependency libraries. Make it better day after day. Clean it continuously. Keeping a clean and up-to-date code base is generally a great way to produce small APKs that only contain what is strictly essential to the app.

Maintaining an unpolluted code base is generally easier when starting a project from scratch; the older the project, the harder it gets. In fact, projects with a long history usually have to deal with dead and/or almost useless code. Fortunately, some development tools are here to help you do the laundry…

Proguard is an extremely powerful tool that obfuscates, optimizes and shrinks your code at compile time. One of its main features for reducing APK size is tree-shaking: Proguard goes through all of your code paths to detect code that is never used. All the unreached (i.e. unnecessary) code is then stripped out of the final APK, potentially reducing its size radically. Proguard also renames your fields, classes and interfaces, making the code as lightweight as possible.

As you may have understood, Proguard is extremely helpful and efficient. But with great responsibilities come great consequences. A lot of developers consider Proguard an annoying development tool because, by default, it breaks apps that rely heavily on reflection. It’s up to developers to configure Proguard and tell it which classes, fields, etc. can or cannot be processed.
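The shrinking step and the reflection problem can both be seen in a toy reachability model. Below is a Java sketch that is not ProGuard's implementation, whose analysis is far richer. Code units are graph nodes, and references are edges. Everything reachable from the roots is kept. The roots are the entry points plus explicit keep rules, which play the role of ProGuard's -keep options. A class that is only reached through reflection has no incoming edge, so it is dropped unless it is kept explicitly. All names are hypothetical.

```java
import java.util.ArrayDeque;
import java.util.Arrays;
import java.util.Collection;
import java.util.Collections;
import java.util.Deque;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

// Toy model of tree-shaking: keep what is reachable from the roots, drop the rest.
class TreeShake {

    // Every node reachable from the roots by following references (iterative DFS).
    static Set<String> reachable(Map<String, List<String>> refs, Collection<String> roots) {
        Set<String> seen = new HashSet<>();
        Deque<String> work = new ArrayDeque<>(roots);
        while (!work.isEmpty()) {
            String node = work.pop();
            if (seen.add(node)) { // first visit: follow its references
                for (String next : refs.getOrDefault(node, Collections.<String>emptyList())) {
                    work.push(next);
                }
            }
        }
        return seen;
    }

    // Every known node that is not reachable: what a shrinker would strip.
    static Set<String> removable(Map<String, List<String>> refs, Collection<String> roots) {
        Set<String> all = new TreeSet<>(refs.keySet());
        for (List<String> targets : refs.values()) all.addAll(targets);
        all.removeAll(reachable(refs, roots));
        return all;
    }

    public static void main(String[] args) {
        Map<String, List<String>> refs = new HashMap<>();
        refs.put("MainActivity", Arrays.asList("Helper"));
        refs.put("OldFeature", Arrays.asList("OldHelper"));
        refs.put("JsonModel", Collections.<String>emptyList()); // only used via reflection
        System.out.println("without keep rule: " + removable(refs, Arrays.asList("MainActivity")));
        System.out.println("with keep rule:    " + removable(refs, Arrays.asList("MainActivity", "JsonModel")));
    }
}
```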

Proguard works on the Java side. Unfortunately, it doesn’t work on the resources side. As a consequence, if an image my_image in res/drawable is not used, Proguard only strips its reference in the R class but keeps the associated image in place.

Lint is a static code analyzer that helps you detect all unused resources with a simple call to ./gradlew lint. It generates an HTML report and gives you the exhaustive list of resources that look unused under the “UnusedResources: Unused resources” section. It is safe to remove these resources as long as you don’t access them through reflection in your code.

Lint analyzes resources (i.e. files under the /res directory) but skips assets (i.e. files under the /assets directory). Indeed, assets are accessed through their name rather than a Java or XML reference. As a consequence, Lint cannot determine whether or not an asset is used in the project. It is up to the developer to keep the /assets folder clean and free of unused files.
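Since Lint cannot help with /assets, a crude check can at least shortlist candidates. Assets are opened by name, for example with AssetManager.open("fonts/title.ttf"). The Java sketch below uses a hypothetical heuristic that is not a Lint feature: it flags every asset path that never appears literally in any source file. Names built at runtime, such as "frame_" + i, will show up as false positives, so review the list before deleting anything. The class name and the two command-line directories are assumptions.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.Collection;
import java.util.List;
import java.util.Set;
import java.util.TreeSet;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Hypothetical heuristic: list asset paths never mentioned in any source text.
class UnreferencedAssets {

    static Set<String> suspects(Collection<String> assetPaths, Collection<String> sourceTexts) {
        Set<String> result = new TreeSet<>();
        for (String asset : assetPaths) {
            boolean mentioned = false;
            for (String src : sourceTexts) {
                if (src.contains(asset)) { mentioned = true; break; }
            }
            if (!mentioned) result.add(asset);
        }
        return result;
    }

    // args[0]: the assets directory, args[1]: the source root (e.g. src/main)
    public static void main(String[] args) throws IOException {
        Path assetsDir = Paths.get(args[0]);
        List<String> assets;
        try (Stream<Path> s = Files.walk(assetsDir)) {
            assets = s.filter(Files::isRegularFile)
                      .map(p -> assetsDir.relativize(p).toString().replace('\\', '/'))
                      .collect(Collectors.toList());
        }
        List<String> sources = new ArrayList<>();
        try (Stream<Path> s = Files.walk(Paths.get(args[1]))) {
            for (Path p : s.filter(Files::isRegularFile).collect(Collectors.toList())) {
                sources.add(new String(Files.readAllBytes(p), StandardCharsets.UTF_8));
            }
        }
        suspects(assets, sources).forEach(System.out::println);
    }
}
```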

Android supports a very large set of devices at its core. In fact, Android has been designed to support devices regardless of their configuration: screen density, screen shape, screen size, etc. As of Android 4.4, the framework natively supports various densities: ldpi, mdpi, tvdpi, hdpi, xhdpi, xxhdpi and xxxhdpi. Android supporting all these densities doesn’t mean you have to export your assets in each one of them.

Don’t be afraid of leaving some densities out of your application if you know only a small number of people will use them. I personally only support hdpi, xhdpi and xxhdpi[2] in my apps. This is not an issue for devices with other densities, because Android automatically computes missing resources by scaling an existing resource.

The main point behind my hdpi/xhdpi/xxhdpi rule is simple. First, I cover more than 80% of my users. Second, xxxhdpi exists just to make Android future-proof, but the future is not now (even if it’s coming very quickly…). Finally, I actually don’t care about the crappy low-res densities such as mdpi or ldpi. No matter how hard I work on these densities, the result will look as horrible as letting Android scale down the hdpi variant.
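The arithmetic behind these buckets is simple. mdpi (160 dpi) is the 1x baseline, so px = dp × dpi / 160. When a bucket is missing, a bitmap is scaled by the ratio of the two densities; for example, an hdpi bitmap shown on an mdpi screen is scaled by 160/240 = 2/3. Below is a small Java sketch of both calculations, using the nominal dpi of each bucket. The class name is hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Android's generalized density buckets with their nominal dpi; mdpi (160 dpi)
// is the 1x baseline, so px = dp * dpi / 160.
class DensityMath {
    static final Map<String, Integer> DPI = new LinkedHashMap<>();
    static {
        DPI.put("ldpi", 120);
        DPI.put("mdpi", 160);
        DPI.put("tvdpi", 213);
        DPI.put("hdpi", 240);
        DPI.put("xhdpi", 320);
        DPI.put("xxhdpi", 480);
        DPI.put("xxxhdpi", 640);
    }

    // Bitmap edge length, in pixels, for a dp size in a given bucket.
    static int px(int dp, String bucket) {
        return Math.round(dp * DPI.get(bucket) / 160f);
    }

    // Factor applied when a bitmap made for one bucket is drawn in another.
    static double scale(String from, String to) {
        return DPI.get(to) / (double) DPI.get(from);
    }

    public static void main(String[] args) {
        for (String bucket : DPI.keySet()) {
            System.out.println(bucket + ": " + px(48, bucket) + "px for a 48dp icon");
        }
        System.out.println("hdpi -> mdpi scale: " + scale("hdpi", "mdpi"));
    }
}
```

Shipping only hdpi, xhdpi and xxhdpi therefore means exporting 72, 96 and 144px versions of a 48dp icon.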

On the same note, having a single variant of an image in drawable-nodpi can also save you space. You can afford to do that if you don’t think scaling artifacts are outrageous, or if the image is very rarely displayed in the app on a day-to-day basis.

Android development often relies on external libraries such as the Android Support Library, Google Play Services, the Facebook SDK, etc. All of these libraries come with resources that are not necessarily useful to your application. For instance, Google Play Services comes with translations for languages your own application doesn’t even support. It also bundles mdpi resources I don’t want to support in my application.

Starting with Android Gradle Plugin 0.7, you can tell the build system which configurations your application deals with. This is done with the resConfig and resConfigs options, available on product flavors and on the default config; they prevent aapt from packaging resources that don’t match the resource configurations the app handles.


Aapt comes with a lossless image compression algorithm. For instance, a true-color PNG that does not require more than 256 colors may be converted to an 8-bit PNG with a color palette. While it may reduce the size of your resources, it shouldn’t prevent you from embracing the lossy PNG preprocessor optimization path. A quick Google search yields several tools such as pngquant, ImageAlpha or ImageOptim. Just pick the one that best fits your designer workflow and requirements and use it!
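Whether an image can take the lossless 8-bit route depends on one number: how many distinct colours it uses, alpha included. Below is a Java sketch that counts them with the standard BufferedImage and ImageIO classes and reports whether the image fits a 256-entry palette. Images that do not fit are the ones where a lossy quantizer such as pngquant has something to offer. The class name is hypothetical.

```java
import java.awt.image.BufferedImage;
import java.io.File;
import java.io.IOException;
import java.util.HashSet;
import java.util.Set;
import javax.imageio.ImageIO;

// Lossless conversion to an 8-bit palette is only possible when the image uses
// at most 256 distinct colours (alpha included).
class PaletteCheck {

    // Counts distinct ARGB values, stopping early once 'limit' is exceeded.
    static int distinctColors(BufferedImage img, int limit) {
        Set<Integer> colors = new HashSet<>();
        for (int y = 0; y < img.getHeight(); y++) {
            for (int x = 0; x < img.getWidth(); x++) {
                colors.add(img.getRGB(x, y));
                if (colors.size() > limit) return colors.size();
            }
        }
        return colors.size();
    }

    static boolean fitsIn8BitPalette(BufferedImage img) {
        return distinctColors(img, 256) <= 256;
    }

    public static void main(String[] args) throws IOException {
        for (String path : args) {
            BufferedImage img = ImageIO.read(new File(path));
            if (img == null) {
                System.out.println(path + ": not a readable image");
                continue;
            }
            System.out.println(path + ": " + (fitsIn8BitPalette(img)
                    ? "palette candidate" : "needs lossy quantization to go 8-bit"));
        }
    }
}
```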

A special type of Android-only image can also be minimized: 9-patches. As far as I know, no tools have been created specifically for this. However, it can be done fairly easily just by asking your designer to reduce the stretchable and content areas to a minimum. In addition to reducing the asset weight, it will also make asset maintenance much easier in the long term.

Android is generally about Java, but there are some rare cases where applications need to rely on native code. Just as you should be opinionated about resources, you should be opinionated about native code too. Sticking to the armeabi and x86 architectures is usually enough in the current Android ecosystem. Here is an excellent article about native libraries weight reduction.

Reusing stuff is probably one of the first important optimizations you learn when you start developing on mobile. In a ListView or a RecyclerView, reuse helps you keep scrolling smooth. But reuse can also help you reduce the final size of your APK. For instance, Android provides several utilities to re-color an asset, using either the new android:tint and android:tintMode attributes on Android L or the good old ColorFilter on all versions.

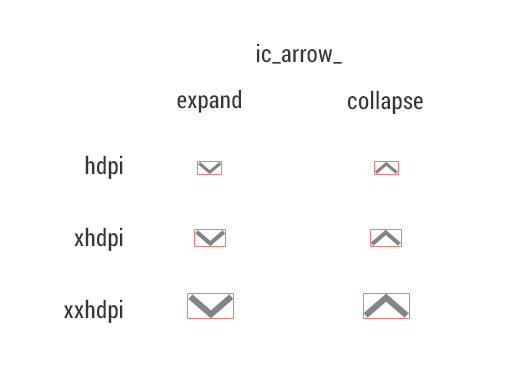
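Re-coloring works because a single-colour icon is really just a shape stored in its alpha channel. Android's default tint mode, SRC_IN, keeps that shape and takes the colour from the tint. Below is a plain-Java sketch of that pixel arithmetic on non-premultiplied ARGB ints. It illustrates the compositing rule only and is not Android's implementation. The class name is hypothetical.

```java
// Sketch of an SRC_IN tint on plain ARGB ints: the icon keeps its shape
// (its alpha channel) and takes the tint colour. Non-premultiplied values assumed.
class SrcInTint {

    static int tintPixel(int iconArgb, int tintArgb) {
        int iconAlpha = iconArgb >>> 24;
        int tintAlpha = tintArgb >>> 24;
        int outAlpha = (iconAlpha * tintAlpha + 127) / 255; // SRC_IN: Sa * Da, rounded
        return (outAlpha << 24) | (tintArgb & 0x00FFFFFF);   // colour comes from the tint
    }

    static int[] tint(int[] iconPixels, int tintArgb) {
        int[] out = new int[iconPixels.length];
        for (int i = 0; i < iconPixels.length; i++) out[i] = tintPixel(iconPixels[i], tintArgb);
        return out;
    }

    public static void main(String[] args) {
        int[] blackIcon = {0xFF000000, 0x80000000, 0x00000000}; // opaque, half, transparent
        for (int p : tint(blackIcon, 0xFF2196F3)) System.out.printf("%08X%n", p);
    }
}
```

One black master asset can thus serve every colour variant the UI needs.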

You can also avoid packaging resources that are only a rotated equivalent of another resource. Let’s say you have two images named ic_arrow_expand and ic_arrow_collapse.

You can easily get rid of ic_arrow_collapse by creating a RotateDrawable relying on ic_arrow_expand. This technique also reduces the time your designer needs to maintain and export the collapsed asset variant.


In some cases, rendering graphics directly from Java code can have a great benefit. One of the best examples of a mammoth weight saving is frame-by-frame animations. I’ve been struggling with Android Wear development recently and had a look at the Android wearable support library. Just like the regular Android support library, the wearable variant contains several utility classes for dealing with wearable devices.

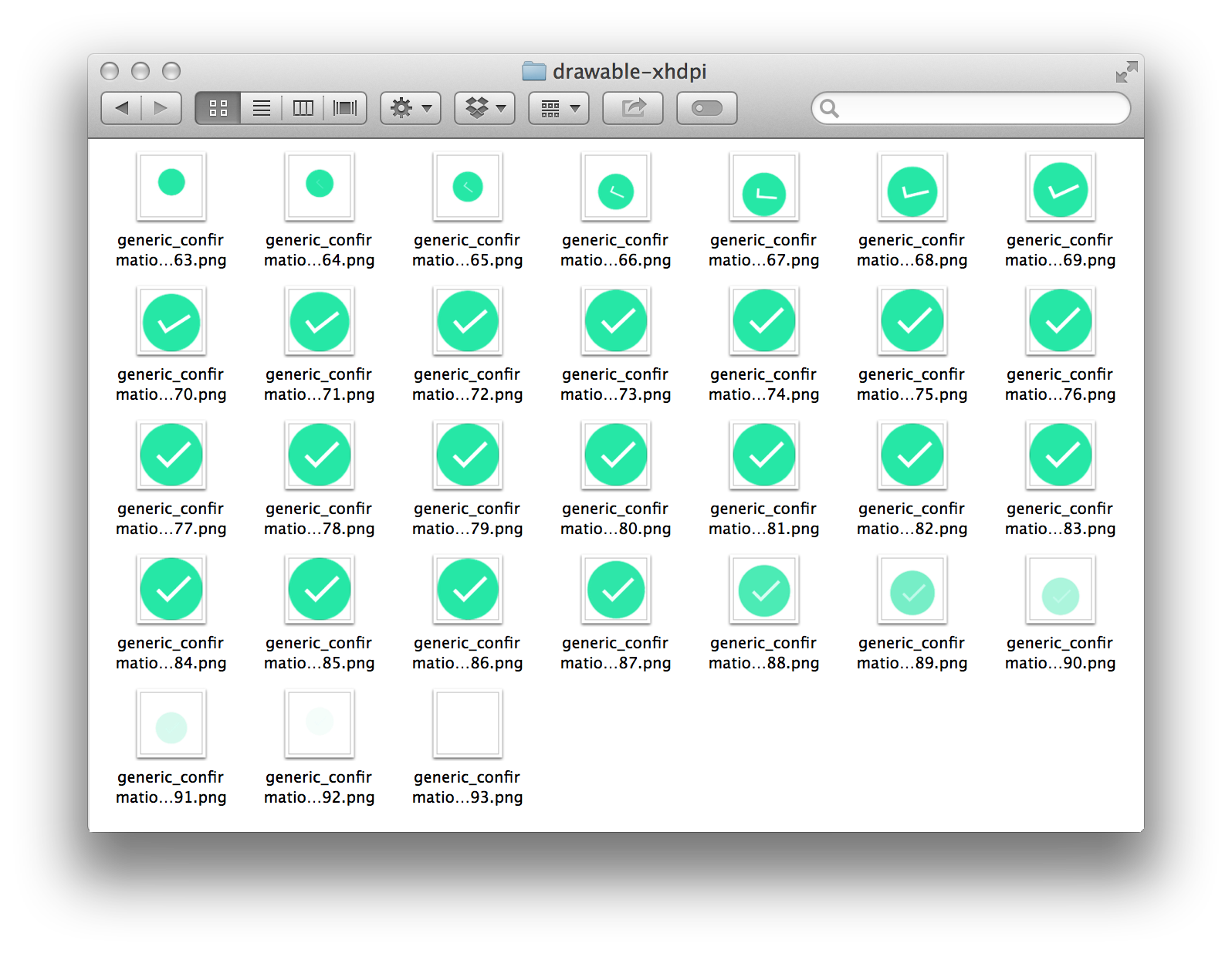

Unfortunately, after building a very basic “Hello World” example, I noticed the resulting APK was more than 1.5MB. After a quick investigation into wearable-support.aar, I discovered the library bundles 2 frame-by-frame animations in 3 different densities: a “success” animation (31 frames) and an “open on phone” animation (54 frames).

The frame-by-frame success animation is built with a simple AnimationDrawable defined in an XML file.


The good point is (I’m being sarcastic, of course) that each frame is displayed for 33ms, making the animation run at 30fps. Having a frame every 16ms would have made the library twice as large… It gets really funny when you continue digging into the code. The generic_confirmation_00175 frame is displayed for 333ms and is followed by generic_confirmation_00185. This is a great optimization that keeps 9 similar frames (176 to 184 inclusive) out of the application. Unfortunately, I was totally disappointed to see that wearable-support.aar actually contains all 9 of these completely unused and useless frames in 3 densities.[3]

Doing this animation in code obviously requires development time. However, it may dramatically reduce the amount of assets in your APK while keeping a smooth animation running at 60fps. At the time of writing, Android doesn’t provide an easy tool to render such animations. But I really hope Google is working on a new lightweight real-time rendering system to animate all of these tiny details that material design is so fond of. An “Adobe After Effects to VectorDrawable” designer tool or equivalent would help a lot.

All of the techniques described above mainly target app and library developers. Could we go further if we had total control over the distribution chain? I guess we could, but that would mainly involve some work on the server side, or more specifically on the Play Store side. For instance, we could imagine a Play Store packaging system that bundles only the native libraries required by the target device.

On a similar note, we could imagine packaging only the resources matching the target device’s configuration. Unfortunately, that would completely break one of Android’s most important features: configuration hot-swapping. Indeed, Android has always been designed to deal with live configuration changes (language, orientation, etc.). For instance, removing resources that are not compatible with the target screen density would be a great benefit, but Android apps can deal with a screen density change on the fly. Even if we imagined deprecating this capability, we would still have to deal with drawables defined for a density other than the target density, as well as those with qualifiers beyond density (orientation, smallest width, etc.).

Server-side APK packaging looks extremely powerful. But it is also very risky, because the final APK delivered to the user would be completely different from the one sent to the Play Store. Delivering an APK with missing resources or assets would simply break apps.

Designing is all about getting the best out of a set of constraints, and the weight of an APK file is clearly one of them. Don’t be afraid of sacrificing one aspect of your application to improve another. For instance, do not hesitate to reduce the quality of the UI rendering if it reduces the size of the APK and makes the app smoother. 99% of your users won’t even notice the quality drop, while they will notice the app is lightweight and smooth. After all, your application is judged as a whole, not as a sum of separate aspects.

Thanks to Frank Harper for reading drafts of this

[1] The .aar library extension is a pretty similar archive, the only difference being that the files are stored in a regular, non-compiled jar/xml form. Resources and Java code are actually compiled at the very moment the Android application using them is built.

Normally there are three different ways to do this:

A good article to start is Clean Code in Android Applications.

Ad 1) Two solutions, see

Ad 2) Android Annotations, see http://androidannotations.org/

Ad 3) Two solutions, see

http://www.technotalkative.com/lazy-android-part-7-useful-tools/